Webarc:Tools Developed

From Adapt

Latest revision as of 22:40, 10 November 2009

Input Data Preprocessors

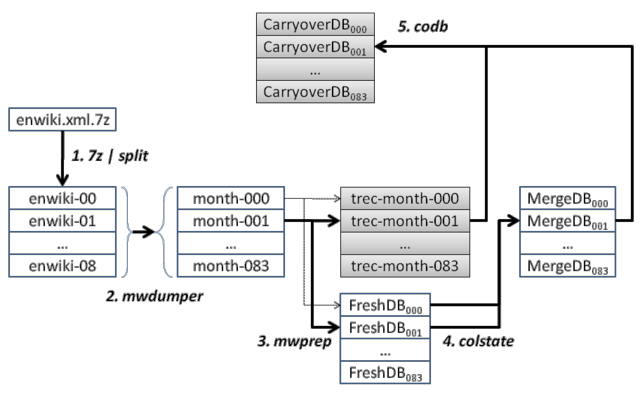

- mwdumper: LRE Monthly Dumper (Java): Based on mwdumper, this tool extracts a monthly snapshot, taken at the end of each month, from the MediaWiki XML dump. It also filters out minor edits and redirects.

- mwprep: MediaWiki-to-TREC Converter (Java): Also based on mwdumper, this tool converts the default MediaWiki XML dump format to the TREC-compliant format. For our experiments, it also constructs a Java Berkeley DB for each month, which we call the 'Fresh DB'. A Fresh DB contains a record for every wiki article updated within the month, and each record has the form { docID, (revision date, file name, offset) }.

- colstate: Merge DB Constructor (Java): This tool constructs a Merge DB for each month. It contains the union of the records in the previous month's Merge DB and the current month's Fresh DB. That is, for month m, <math>MergeDB_m = MergeDB_{m-1} \cup FreshDB_m</math>. Building the Merge DB for a month requires the Merge DB of the previous month, so this tool must be run sequentially from the first month to the last.

- codb: Carryover DB Constructor (Java): This tool compares the Fresh DB and the Merge DB for each month. From this it identifies the wiki articles that must be carried over from the previous month (i.e. those that appear only in the Merge DB and not in the monthly snapshot). It then constructs a further DB for each month, the Carryover DB, containing these carryovers. That is, for month m, <math>CarryoverDB_m = MergeDB_m \setminus FreshDB_m</math>.
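The per-month extraction done by mwdumper and mwprep can be sketched in a few lines of Python. This is a minimal illustration, not the tools' actual code, and it rests on several assumptions:

- Revisions are already parsed into in-memory records. The `Revision` fields are hypothetical; the real tools stream the XML dump.
- Minor edits and redirects are dropped before picking each article's latest revision within the month.
- Output uses the standard `<DOC>`/`<DOCNO>`/`<TEXT>` TREC layout.

Each Fresh DB record is modeled as a dict entry `docID -> (revision date, file name, offset)`, following the record form given above.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Revision:
    doc_id: str          # article identifier (hypothetical field name)
    timestamp: datetime  # revision time
    minor: bool          # MediaWiki "minor edit" flag
    redirect: bool       # True if this revision is a redirect page
    text: str


def monthly_snapshot(revisions, year, month):
    """Latest non-minor, non-redirect revision of each article edited in (year, month)."""
    latest = {}
    for rev in revisions:
        if rev.minor or rev.redirect:
            continue  # filtered out, as mwdumper does
        if (rev.timestamp.year, rev.timestamp.month) != (year, month):
            continue  # not part of this month's snapshot
        current = latest.get(rev.doc_id)
        if current is None or rev.timestamp > current.timestamp:
            latest[rev.doc_id] = rev
    return latest


def to_trec(snapshot, file_name):
    """Serialize a snapshot as TREC text and build the matching Fresh DB records."""
    parts, fresh_db, offset = [], {}, 0
    for doc_id in sorted(snapshot):
        rev = snapshot[doc_id]
        doc = f"<DOC>\n<DOCNO>{doc_id}</DOCNO>\n<TEXT>\n{rev.text}\n</TEXT>\n</DOC>\n"
        # Record: { docID, (revision date, file name, offset) }
        fresh_db[doc_id] = (rev.timestamp.date().isoformat(), file_name, offset)
        parts.append(doc)
        offset += len(doc.encode("utf-8"))  # byte offset where the next document starts
    return "".join(parts), fresh_db
```

Storing a byte offset with each record lets a later stage seek straight to a document in the monthly TREC file instead of rescanning it.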

For our experiments, we ran the tools listed above in sequence to obtain monthly snapshots of Wikipedia articles from 2001 to 2007. The same run identifies, for each month, the articles that have no new revision in that month and therefore need to be carried over and indexed for it.
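The Merge DB and Carryover DB recurrences, and the reason they must be computed month by month, can be sketched with plain dictionaries standing in for the Berkeley DBs. The sketch assumes each DB is keyed by docID. It also assumes that, in the union, a month's fresh record replaces the older record for the same article. The function name `build_month_dbs` is hypothetical.

```python
def build_month_dbs(fresh_dbs):
    """Apply the colstate and codb recurrences over months in chronological order.

    fresh_dbs: list of dicts, one per month, mapping docID -> record.
    Returns one (merge_db, carryover_db) pair per month.
    """
    merge_prev = {}
    results = []
    for fresh in fresh_dbs:  # sequential: month m needs the Merge DB of month m-1
        # MergeDB_m = MergeDB_{m-1} union FreshDB_m
        # (assumption: the fresh record wins for a docID present in both)
        merge = {**merge_prev, **fresh}
        # CarryoverDB_m = MergeDB_m minus FreshDB_m, compared by docID
        carryover = {doc_id: rec for doc_id, rec in merge.items() if doc_id not in fresh}
        results.append((merge, carryover))
        merge_prev = merge
    return results
```

Each loop iteration reuses the previous month's Merge DB, so the months cannot be processed out of order or independently of one another.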

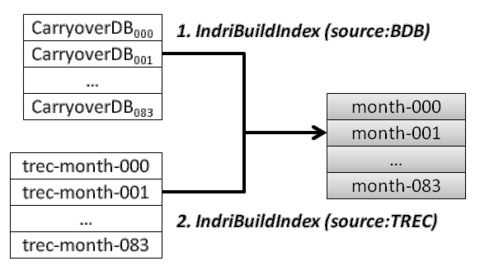

Indexers

- IndriBuildIndex: Lemur Indexer (modified) (C++): Building on the Lemur toolkit, we added support for an additional input source, namely Berkeley DB (for the Carryover DB). We also added extra statistics for temporal scoring. In particular, the modified index also stores 'fresh document counts' (the number of non-carried-over documents) and 'term counts for fresh documents' (the number of terms within non-carried-over documents). Both are stored for the entire index and for each individual term in the index.
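The counts the modified indexer keeps can be illustrated with a small Python sketch. It assumes each document arrives as a token list plus a flag. The flag says whether the document is fresh (from the monthly snapshot) or carried over (from the Carryover DB). The dictionary keys are hypothetical names; the real Indri index keeps these statistics in its own on-disk structures.

```python
from collections import Counter


def index_stats(docs):
    """docs: iterable of (tokens, is_fresh); is_fresh is False for carried-over documents."""
    stats = {"doc_count": 0, "term_count": 0,
             "fresh_doc_count": 0, "fresh_term_count": 0}
    per_term = {}  # term -> Counter of that term's statistics
    for tokens, is_fresh in docs:
        # Index-wide totals, plus the fresh-only counterparts.
        stats["doc_count"] += 1
        stats["term_count"] += len(tokens)
        if is_fresh:
            stats["fresh_doc_count"] += 1
            stats["fresh_term_count"] += len(tokens)
        # Per-term statistics: document frequency and occurrence count.
        for term, n in Counter(tokens).items():
            t = per_term.setdefault(term, Counter())
            t["doc_count"] += 1   # documents containing the term
            t["term_count"] += n  # occurrences of the term
            if is_fresh:
                t["fresh_doc_count"] += 1
                t["fresh_term_count"] += n
    return stats, per_term
```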

Distributed Index Support

- PBS Jobs Scripts for Indexing (Bash): These scripts allow indexers to be deployed over multiple nodes in the Chimera cluster.

Retrievers

- Temporal Okapi Retrieval Method (C++): Building on the Okapi retrieval method implemented in the Lemur toolkit, we added a Temporal Okapi Retrieval Method. It takes the extra statistics (those included by the modified Lemur indexer) into account for temporally-anchored scoring.

- Temporal KL Retrieval Method (C++): Building on the Simple KL retrieval method implemented in the Lemur toolkit, the Temporal KL Retrieval Method likewise uses the extra statistics from the modified Lemur indexer for temporally-anchored scoring.
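This page does not give the temporal scoring formulas. The sketch below therefore only shows where collection statistics enter the two base methods:

- a BM25 (Okapi) term weight with the classic Robertson/Sparck Jones IDF;
- a KL-divergence score with Dirichlet smoothing (an assumed smoothing choice).

A temporal variant could pass fresh counts (fresh document count, per-term fresh document or term counts) instead of, or alongside, the index-wide totals. Which combination the Temporal Okapi and Temporal KL methods actually use is not stated here. Function names and default parameter values are illustrative.

```python
import math
from collections import Counter


def okapi_term_weight(tf, doc_len, qtf, df, n_docs, avg_doc_len,
                      k1=1.2, b=0.75, k3=7.0):
    """BM25 contribution of one query term to one document's score."""
    # Robertson/Sparck Jones IDF. n_docs and df are the collection statistics
    # that a temporal variant might replace with (or mix with) fresh counts.
    idf = math.log((n_docs - df + 0.5) / (df + 0.5))
    # Saturating, length-normalised document term frequency.
    norm_tf = tf * (k1 + 1) / (tf + k1 * (1 - b + b * doc_len / avg_doc_len))
    # Saturating query term frequency.
    norm_qtf = qtf * (k3 + 1) / (qtf + k3)
    return idf * norm_tf * norm_qtf


def kl_score(query, doc_tf, doc_len, coll_tf, coll_len, mu=1000.0):
    """Query-model cross-entropy against a Dirichlet-smoothed document model.

    Rank-equivalent to negative KL divergence for a fixed query. coll_tf and
    coll_len define the collection language model (again, statistics a temporal
    variant might take from fresh documents). Assumes every query term occurs
    in the collection, so each smoothed probability is non-zero.
    """
    q = Counter(query)
    q_len = sum(q.values())
    score = 0.0
    for term, count in q.items():
        p_coll = coll_tf[term] / coll_len
        p_doc = (doc_tf.get(term, 0) + mu * p_coll) / (doc_len + mu)
        score += (count / q_len) * math.log(p_doc)
    return score
```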

Distributed Search Support

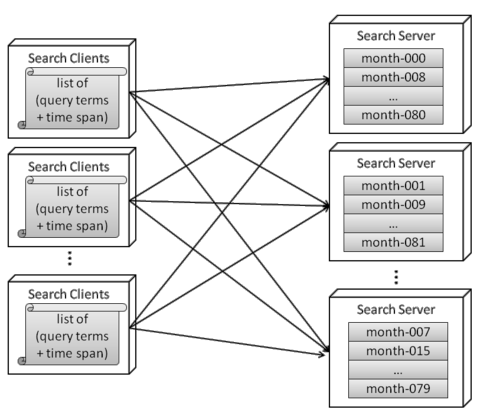

- tsearch: Temporal Search Client/Server (C): Using RPC, each search server provides two remote procedures to clients: getstats and search. Getstats returns statistics (such as doc count, term count, fresh doc count, ...) for a provisional query, while search returns the actual search results for a query. Each search server is multithreaded and can serve multiple indexes simultaneously. Each search client is also multithreaded and sends search requests to multiple servers simultaneously.

- PBS Jobs Scripts for Searching (Bash): These scripts deploy search servers and clients over multiple nodes in the Chimera cluster. With n nodes and i indexes, each node is responsible for about i/n indexes. Indexes are interleaved across nodes: node 1 holds indexes 1, n+1, 2n+1, ..., node 2 holds indexes 2, n+2, 2n+2, ..., and so on.
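The interleaved index assignment and the two remote procedures of tsearch can be combined into one toy, in-process Python sketch.

Everything here is a stand-in:

- `SearchServer` and its Python method signatures are hypothetical.
- The simple tf-idf score replaces the temporal retrieval methods.
- Threads replace the real C/RPC client and servers.

The sketch assumes getstats exists so that a client can first sum statistics across all servers. Every server then scores with the same global numbers, which keeps scores from different indexes comparable. Nodes and indexes are numbered from 1, as in the description above.

```python
import heapq
import math
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def assign_indexes(num_indexes, num_nodes):
    """Interleaved assignment: node k gets indexes k, k+n, k+2n, ... (1-based)."""
    return {node: list(range(node, num_indexes + 1, num_nodes))
            for node in range(1, num_nodes + 1)}


class SearchServer:
    """Toy, in-process stand-in for one tsearch server and its indexes."""

    def __init__(self, indexes):
        self.indexes = indexes  # each index: {doc_id: [tokens]}

    def getstats(self, query):
        """Partial statistics for a provisional query over this server's indexes."""
        stats = Counter()
        for index in self.indexes:
            stats["doc_count"] += len(index)
            for tokens in index.values():
                for term in set(query) & set(tokens):
                    stats["df:" + term] += 1
        return stats

    def search(self, query, global_stats, k):
        """Top-k (score, doc_id) pairs, scored with the client-supplied global stats."""
        n = global_stats["doc_count"]
        hits = []
        for index in self.indexes:
            for doc_id, tokens in index.items():
                tf = Counter(tokens)
                score = sum(tf[t] * math.log(1 + n / global_stats["df:" + t])
                            for t in query if tf[t])
                if score > 0:
                    hits.append((score, doc_id))
        return heapq.nlargest(k, hits)


def distributed_search(servers, query, k=10):
    """Multithreaded client: fan out getstats, sum the results, then fan out search."""
    with ThreadPoolExecutor(max_workers=len(servers)) as pool:
        global_stats = sum(pool.map(lambda s: s.getstats(query), servers), Counter())
        partial_hits = pool.map(lambda s: s.search(query, global_stats, k), servers)
        # Merge the per-server top-k lists into one global top-k.
        return heapq.nlargest(k, (hit for hits in partial_hits for hit in hits))
```

For example, with 4 indexes on 2 nodes, `assign_indexes(4, 2)` gives node 1 indexes 1 and 3, and node 2 indexes 2 and 4.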

Result Analysis

- Trec_Eval (modified) (C): We added support for Kendall's tau to the existing Trec_Eval tool.
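The page does not say which rankings the modified Trec_Eval compares or how it handles ties. What follows is therefore only a sketch of Kendall's tau-a for two tie-free rankings of the same items, tau = (concordant pairs - discordant pairs) / (n(n-1)/2). The function name `kendall_tau` is hypothetical.

```python
from itertools import combinations


def kendall_tau(ranking_a, ranking_b):
    """Kendall's tau-a between two tie-free rankings of the same items."""
    n = len(ranking_a)
    if (n < 2 or len(ranking_b) != n or len(set(ranking_a)) != n
            or set(ranking_a) != set(ranking_b)):
        raise ValueError("need two rankings of the same >= 2 distinct items")
    pos_b = {item: rank for rank, item in enumerate(ranking_b)}
    concordant = discordant = 0
    # combinations() keeps input order, so x is ranked above y in ranking_a.
    for x, y in combinations(ranking_a, 2):
        if pos_b[x] < pos_b[y]:
            concordant += 1
        else:
            discordant += 1
    return (concordant - discordant) / (n * (n - 1) / 2)
```

A value of 1 means the two rankings agree on every pair, and -1 means one is the exact reverse of the other.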

Miscellanea

- Berkeley DB Wrapper for Carryover DB (Java): A simple wrapper that lets C/C++ implementations access the Carryover DB (which is based on Java Berkeley DB) through JNI.