Webarc:PISA

From Adapt

Overview

When archived web pages are stored in containers, an external index is set up and managed that maps a URL and crawl time to the ID of the container and the offset where the corresponding information is archived. Without an external index, a sequential look up through all containers to search for the web information will take an enormous amount of time for any significant size web archive.

Previous indexing schemes construct a B+-Tree in which each key entry consists of the concatenation of a URL and crawl time. Based on the concatenated key, a search through the B+-Tree leads to a container ID and an offset where the corresponding content has been stored. Clearly the B+-Tree will have separate entries for the same URL with different crawl times, and the corresponding web content will be stored separately for every crawl even though the content may not change over the past several crawls. To avoid storing any duplicate web content and yet maintain the ability to recover the contents corresponding to a URL and a date, we use techniques that are similar to those introduced for persistent or multi-version data structures .

Operations

The followings are some of the notable operations that PISA supports.

- (URL, time) Insert: Given an object defined by a URL and a crawl time, insert the information regarding the object into the indexing structure with a pointer to its container and the offset within the container.

- (URL, time) Query: Given a URL and a time, determine the location of the content of the URL that was valid at the specified time. The location refers to the container ID and the offset within the container. (URL, now) Query is a special case of this type of query, which determines the location of the most-recent content of the URL.

- (URL, time span) Query: Given a URL and a time span, determine the locations of the contents of the URL that were valid during the specified time span.

- (URL, ---) Query: Given a URL, determine all the locations and crawl times corresponding to the URL.

- (----, time span) Query: Given a time span, determine all the URLs and their corresponding locations in the archive, which were valid at the specified time span.

Performance

Our PISA indexing structure is somewhat simpler and more practical than those reported in the literature, but achieves the same asymptotic performance for our dynamic operations as the best known schemes.

| (URL,time) Insert | (URL,now) Query | (URL,time) Query | (URL,time span) Query | (URL,---) Query | (---,time span) Query | Space |

|---|---|---|---|---|---|---|

| <math> \textstyle O(log_B M) </math> | <math> \textstyle O(log_B M) </math> | <math> \textstyle O(log_B N) </math> | <math> \textstyle O((log_B N)+\frac{R}{B}) </math> | <math> \textstyle O((log_B N)+\frac{R}{B}) </math> | <math> \textstyle O(???TBD???) </math> | <math> \textstyle O(\frac{R}{B}) </math> |

B: block size N: the number of all entries M: the number of live entries R: the number of results

Duplicate Detection

Besides PISA's main role as a location index, another place that PISA can be useful is in duplicate detection. Duplicate detection can be done by, in addition to the location information, storing hash of the content in leaf nodes of PISA, and comparing the hash of the current content with the one stored in PISA for the most recent content. Since queries to the most recent version takes only <math>O(log_B M)</math>, they can be performed very quickly, being independent of the total data size which keeps grows over time.

We have performed duplicate detection experiments on two different datasets: New Websites and Governors Websites. News Websites is from a Stanford’s event-based collection pertaining to the 2006 US House of Representatives Election, which was originally crawled from October 25, 2006 to November 11, 2006. Since news websites tend to be updated very frequently (several times a day), an archive collection obtained by a series of daily crawls is less likely to contain duplicate web pages. Governors Websites is from a Stanford’s event-based collection pertaining to Hurricane Katrina, originally crawled from September 3, 2005 to October 29 over eight State Governors' websites (Alabama, Mississippi, North Dakota, New Mexico, Oklahoma, Pennsylvania, Texas and Louisiana). Since updates in government websites are usually slow-paced, this dataset is representative of an archiving scenario over slow changing websites, where duplicates are expected to occur more frequently.

| Days of Crawls | Crawl Dates | Avg # of Pages / Crawl | Total # of Pages | Avg Size / Crawl | Total Size | |

|---|---|---|---|---|---|---|

| News Websites | 8 | 10/25/06 ~ 11/02/06 | 298,269 | 2,335,600 | 9.32GB | 73.38GB |

| Governors Websites | 28 | 09/08/05 ~ 10/07/05 | 10,055 | 273,717 | 228MB | 5.98GB |

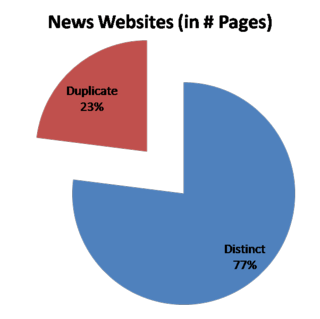

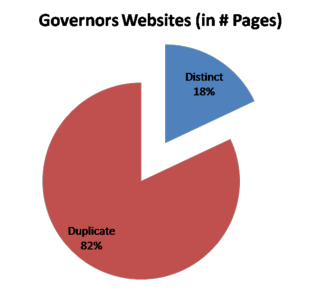

The following two graphs show the percentage of the number of duplicate web pages detected in News Websites and Governors Websites, respectively.

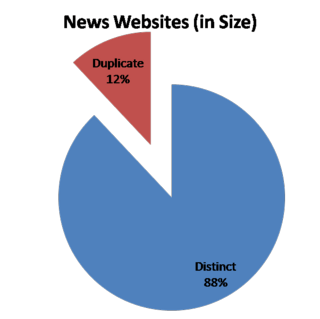

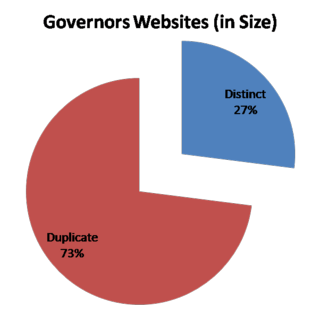

The following two graphs show the percentage of the byte size of duplicate web sites.

The time overheads to process the two datasets are in the following table.

| Total | Per Page (Average) | Per MB(Average) | |

|---|---|---|---|

| News Websites | 13m 52s | 0.356ms | 11.076ms |

| Governors Websites | 27s | 0.098ms | 4.444ms |

Publication

Song, S. and JaJa, J., Archiving Temporal Web Information: Organization of Web Contents for Fast Access and Compact Storage:UMIACS-TR-2008-08. 2008, University of Maryland Institute for Advanced Computer Studies. pdf