Webarc:Packaging: Difference between revisions

From Adapt

No edit summary |

No edit summary |

||

| Line 3: | Line 3: | ||

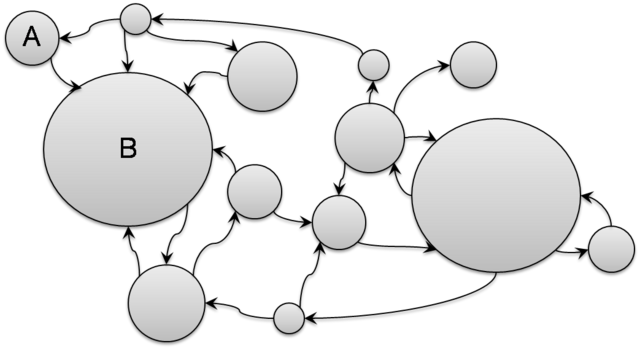

Web can be considered as a weighted, directed graph where web pages are vertices and hyperlinks are edges in the graph. The size of a page is the weight of the corresponding vertex. | Web can be considered as a weighted, directed graph where web pages are vertices and hyperlinks are edges in the graph. The size of a page is the weight of the corresponding vertex. | ||

[[Image:webgraph.png]] | [[Image:webgraph.png|640px]] | ||

=== Web Containers === | === Web Containers === | ||

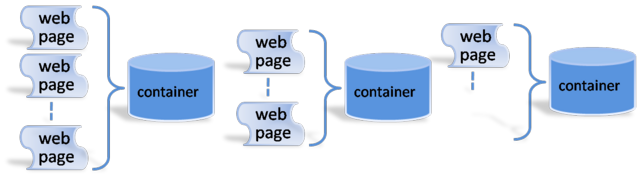

There are many different ways to archive the web. One of the most popular methods is through use of containers. In this container method, a multitude of web pages are aggregated into a container. An emerging standard for such containers is the WARC format, which evolved from the ARC container format developed by the Internet Archive, currently the world’s largest internet archive. | There are many different ways to archive the web. One of the most popular methods is through use of containers. In this container method, a multitude of web pages are aggregated into a container. An emerging standard for such containers is the WARC format, which evolved from the ARC container format developed by the Internet Archive, currently the world’s largest internet archive. | ||

[[Image:webcontainers.png]] | [[Image:webcontainers.png|640px]] | ||

== Motivation == | == Motivation == | ||

| Line 17: | Line 17: | ||

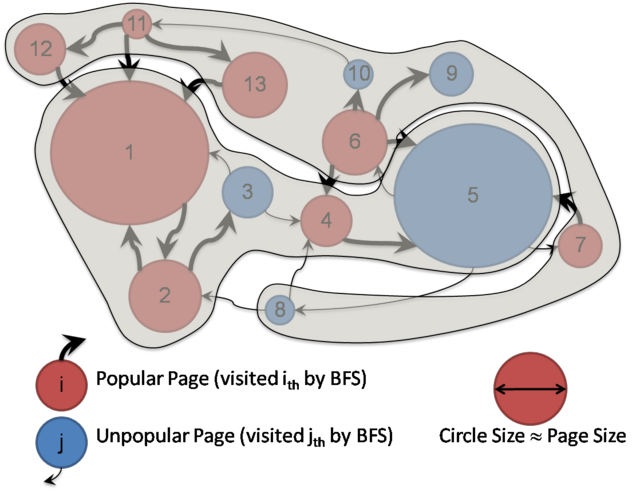

Although simple and straightforward, one problem with this simple packaging approach appears at access time. That is, tightly related pages can end up in different containers, and more number of containers will be necessary in a random browsing session. | Although simple and straightforward, one problem with this simple packaging approach appears at access time. That is, tightly related pages can end up in different containers, and more number of containers will be necessary in a random browsing session. | ||

[[Image:conv_container_packaging.png]] | [[Image:conv_container_packaging.png|640px]] | ||

== Our Goal == | == Our Goal == | ||

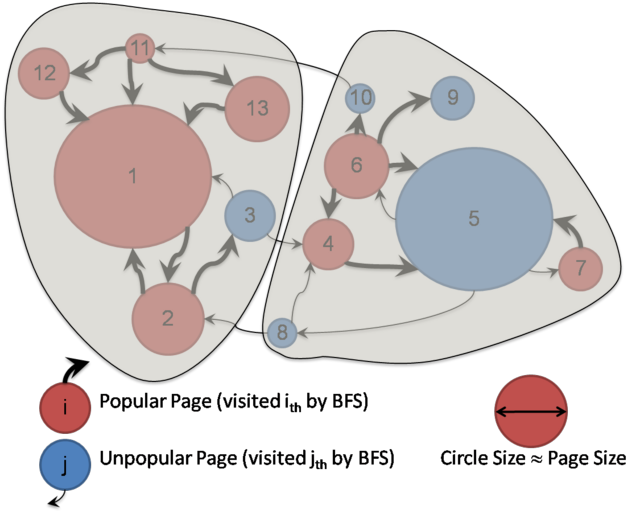

We aim to develop a methodology to minimize the probability that a user jumps often between containers when accessing the web archive. | We aim to develop a methodology to minimize the probability that a user jumps often between containers when accessing the web archive. | ||

[[Image:ideal_container_packaging.png]] | [[Image:ideal_container_packaging.png|640px]] | ||

== Our Approach == | == Our Approach == | ||

Revision as of 15:50, 24 September 2008

Background

Web Graph

Web can be considered as a weighted, directed graph where web pages are vertices and hyperlinks are edges in the graph. The size of a page is the weight of the corresponding vertex.

Web Containers

There are many different ways to archive the web. One of the most popular methods is through use of containers. In this container method, a multitude of web pages are aggregated into a container. An emerging standard for such containers is the WARC format, which evolved from the ARC container format developed by the Internet Archive, currently the world’s largest internet archive.

Motivation

Conventional Container Packaging

The conventional way to construct containers is rather naive. Most web crawls are based on Breadth First Search or Depth First Search, and throughout the crawl session, first seen pages are put into a container until the container gets full. When the container gets full, a new container is created for the pages to be seen next. The process repeats itself until the crawl session ends.

Problem with the Conventional Container Packaging

Although simple and straightforward, one problem with this simple packaging approach appears at access time. That is, tightly related pages can end up in different containers, and more number of containers will be necessary in a random browsing session.

Our Goal

We aim to develop a methodology to minimize the probability that a user jumps often between containers when accessing the web archive.

Our Approach

Graph Analysis Technique

Based on PageRank, we compute EdgeRank for each edge that represents the global probability of the edge to be taken.

<math> EdgeRank(u) = \frac{ \displaystyle \frac{1-d}{N} + d \sum_{v \in I_u} p_{vu} PR(v)}{outdegree(v)}</math>, where <math>N</math> is the number of pages, <math>d</math> is a damping factor (=0.15), <math>I_u</math> is a set of pages that have hyperlinks to Page <math>u</math>, <math>p_{vu}</math> is the probability of the hyperlink from Page <math>v</math> to Page <math>u</math> to be taken, and <math>PR(v)</math> is the PageRank of Page <math>v</math>

Graph Partitioning Technique

Given a directed web graph <math>G:(V, E)</math> with weighted nodes and edges, we determine a partition, such that

- Edge-Cut is minimized.

- The sum of node weights in each part is upper bounded by some K.

Since this is an NP-complete problem, we apply a heuristic algorithm. In our experiments, we choose an algorithm introduced by Karypis and Kumar in 1998 known as Fast Multilevel Graph Partitioning Algorithm. Below is the overview of the algorithm.

- Compute a maximal matching using a randomized algorithm.

- Coarsen the graph by collapsing the matched vertices together.

- Repeat Step 1~2 until a desired size of the coarsened graph is achieved.

- Compute minimum edge-cut bisection.

- Refine and uncoarsen the partitioned graph.