Webarc:Temporally Anchored Scoring Experiments: Difference between revisions

From Adapt

No edit summary |

No edit summary |

||

| (22 intermediate revisions by the same user not shown) | |||

| Line 10: | Line 10: | ||

==Queries== | ==Queries== | ||

Based on the AOL query log made briefly available in 2005, we build our temporal query load by extracting multi-term query phrases where the user selected an English Wikipedia article among the search results. Note that the different temporal contexts give different relative weights to each of the query terms, yielding different result rankings. In other words, for a query phrase with term t1 and t2, t1 may be given more weight than t2 at a certain query time span qts1, but it may be opposite at a different query time span qts2. This means that two document versions that belong to both qts1 and qts2 can have different rankings depending on the specified query time span. However, in the case of single-term queries, any two document versions will retain their ranking regardless of the specified query time span, as long as both belong to the query time span. In our experiments, we highlight the impact on the search results from different query time spans by focusing only on multi-term queries. The more general case where single-term queries are also included will be able to be inferred from the results | Based on the AOL query log made briefly available in 2005, we build our temporal query load by extracting multi-term query phrases where the user selected an English Wikipedia article among the search results. Note that the different temporal contexts give different relative weights to each of the query terms, yielding different result rankings. In other words, for a query phrase with term t1 and t2, t1 may be given more weight than t2 at a certain query time span qts1, but it may be opposite at a different query time span qts2. This means that two document versions that belong to both qts1 and qts2 can have different rankings depending on the specified query time span. However, in the case of single-term queries, any two document versions will retain their ranking regardless of the specified query time span, as long as both belong to the query time span. In our experiments, we highlight the impact on the search results from different query time spans by focusing only on multi-term queries. The more general case where single-term queries are also included will be able to be inferred from the results (It has been reported that about 80% of queries are multi-termed). | ||

Once we extract qualified query phrases, we further filter out less frequently appearing phrases. In particular, we take the query phrases that appear 10 times or more in the log. As a result, we gather a total of 223 such query phrases. | Once we extract qualified query phrases, we further filter out less frequently appearing phrases. In particular, we take the query phrases that appear 10 times or more in the log. As a result, we gather a total of 223 such query phrases. | ||

Using the 223 query phrases, we now construct a temporal query load by combining query time spans with each of the query phrases. Specifically, we use 8 query time span lengths: 1, 2, 4, 8, 16, 32, 64, and 83 months. For each query time span length, we make all possible query time spans, starting from the first month. For example, for the query time span length of two months, we make 82 query time spans: (February 1st 2001 ~ March 31st 2001), (March 1st 2001 ~ April 30th 2001), ..., (November 1st 2007 ~ December 31st 2007). A total of 462 (= 83 + 82 + 80 + 76 + 68 + 52 + 20 + 1) different query time spans are | Using the 223 query phrases, we now construct a temporal query load by combining query time spans with each of the query phrases. Specifically, we use 8 query time span lengths: 1, 2, 4, 8, 16, 32, 64, and 83 months. For each query time span length, we make all possible query time spans, starting from the first month. For example, for the query time span length of two months, we make 82 query time spans: (February 1st 2001 ~ March 31st 2001), (March 1st 2001 ~ April 30th 2001), ..., (November 1st 2007 ~ December 31st 2007). A total of 462 (= 83 + 82 + 80 + 76 + 68 + 52 + 20 + 1) different query time spans are used. In the end, we generate 103,026 (= 223 x 462) queries in each experiment session. | ||

[[Webarc:Temporal Query Load |The complete list of query terms and query time spans]] | [[Webarc:Temporal Query Load |The complete list of query terms and query time spans]] | ||

| Line 22: | Line 22: | ||

==Experiments== | ==Experiments== | ||

The experiments consist of eight experiment sessions, each with one of the following time window sizes: 1, 2, 4, 8, 16, 32, 64, and 83 month. For each experiment session, the temporal query load described above is fed into our search servers | The experiments consist of eight experiment sessions, each with one of the following time window sizes: 1, 2, 4, 8, 16, 32, 64, and 83-month. For each experiment session, the temporal query load described above is fed into our search servers. | ||

[[Webarc:Time Windows Used in Experiments|Time Windows Used in Experiments]] | [[Webarc:Time Windows Used in Experiments|Time Windows Used in Experiments]] | ||

When scoring relevance, approximated query / document statistics are used. For a query time span (tp<sub>1</sub> ~ tp<sub>2</sub>), we find a series of time windows tw<sub>i</sub> ~ tw<sub>j</sub> that completely cover (tp<sub>1</sub> ~ tp<sub>2</sub>). The statistics for the query time span is approximated by combining statistics of tw<sub>i</sub> ~ tw<sub>j</sub>. | |||

[[Webarc:Combining Statistics in Multiple Time Windows|How We Combine Statistics in Multiple Time Windows]] | |||

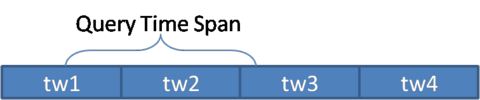

For example, if tw<sub>1</sub> ~ tw<sub>3</sub> completely overlaps a given query time span as in the figure below, we combine statistics of tw<sub>1</sub>, tw<sub>2</sub> and tw<sub>3</sub> and use them when scoring. | |||

<center>[[Image:stat_approximation.png|480px]]</center> | |||

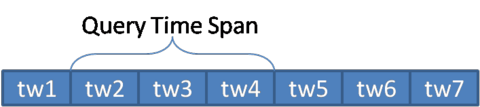

In the case where the time window size is a single month, a series of time windows always perfectly overlap with any query time span, and therefore we use the exact statistics found within the query time span. | |||

<center>[[Image:tw1.png|480px]]</center> | |||

Once we run all the eight experiment sessions, we obtain 64 result sets as shown in the table below. In the table, each row represents an experiment session with a given time window size, and each column represents a query time span size. In the entries of the table, the result set qts.''i''_tw.''j'' refers to the result set for the query time span size ''i'' and the time window size ''j''. The size of each result set varies, depending on the query time span size. For example, qts.''1''_tw.''x'' has search results for 18,509 (= 223 query phrases x 83 query time spans) queries, while qts.''83''_tw.''x'' has search results for only 223 (= 223 x 1) queries. | Once we run all the eight experiment sessions, we obtain 64 result sets as shown in the table below. In the table, each row represents an experiment session with a given time window size, and each column represents a query time span size. In the entries of the table, the result set qts.''i''_tw.''j'' refers to the result set for the query time span size ''i'' and the time window size ''j''. The size of each result set varies, depending on the query time span size. For example, qts.''1''_tw.''x'' has search results for 18,509 (= 223 query phrases x 83 query time spans) queries, while qts.''83''_tw.''x'' has search results for only 223 (= 223 x 1) queries. | ||

| Line 68: | Line 80: | ||

The second goal is to examine how approximating scoring parameters can affect the search results, depending on the time window size. | The second goal is to examine how approximating scoring parameters can affect the search results, depending on the time window size. | ||

When the time window size is a single month, any query time span will perfectly match one or more of consecutive time windows. Thus, the statistics that we use for any query time span will be exact ones. On the other hand, when the time window size of two or more months, many query time spans will end up covering parts of time windows, and we approximate the statistics for a query time span by using the combined statistics of all overlapping time windows. See [xx] for how we combine statistics of multiple time windows. | When the time window size is a single month, any query time span will perfectly match one or more of consecutive time windows. Thus, the statistics that we use for any query time span will be exact ones. On the other hand, when the time window size of two or more months, many query time spans will end up covering parts of time windows, and we approximate the statistics for a query time span by using the combined statistics of all overlapping time windows. See [xx] for how we combine statistics of multiple time windows. | ||

Therefore, we can examine the impact of time-window based approximation by comparing the search results set qts.''x''_tw.''y'' (y!=1) with qts.''x''_tw.''1''. for all ''x''s and ''y''s. For example, comparing qts.''1''_tw.''2'' with qts.''1''_tw.''1'' will show us how the search results are different when we use 2-month time windows in the case that the query time span is a single month. In particular, we compute | Therefore, we can examine the impact of time-window based approximation by comparing the search results set qts.''x''_tw.''y'' (y!=1) with qts.''x''_tw.''1''. for all ''x''s and ''y''s. For example, comparing qts.''1''_tw.''2'' with qts.''1''_tw.''1'' will show us how the search results are different when we use 2-month time windows in the case that the query time span is a single month. | ||

In particular, we compute the following metrics for all <math>r \in \{10, 20, 100\}</math>, <math>x \in \{1, 2, 4, 8, 16, 32, 64, 83\}</math>, and <math>y \in \{2, 4, 8, 16, 32, 64, 83\}</math>, where <math>r</math> is the number of search results, <math>x</math> is the query time span length, and <math>y</math> is the time window length. | |||

* Mean Recall: <font size="4"><math>mP_r(x, y)</math></font> | |||

* Mean Kendall's <math>\tau</math>: <font size="4"><math>mKT_r(x, y)</math></font> | |||

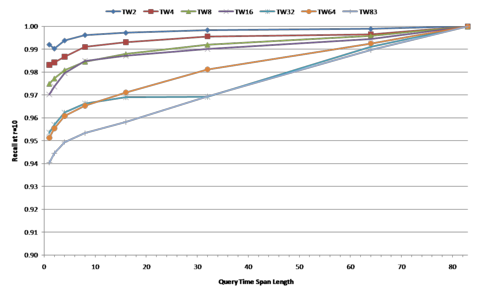

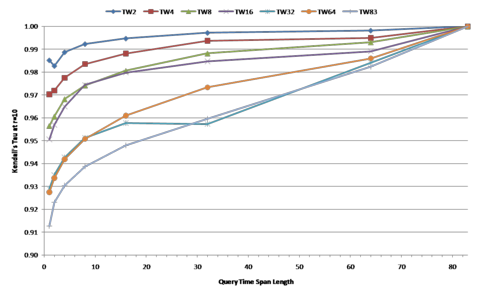

We plot mean recalls and kendall's <math>\tau</math>'s in graphs. Each of the graphs in the case where r=10 and Okapi BM25 is used as the scoring method is shown below. | |||

<center>[[Image:okapi_recall_r10.png|480px]]</center> | |||

<center>[[Image:okapi_kt_r10.png|480px]]</center> | |||

[[Webarc:Temporal Scoring Result Graphs |Complete Results]] | |||

==Further Information== | ==Further Information== | ||

* [[Webarc:Input Dataset Statistics |[1] Input Dataset Statistics]] | * [[Webarc:Input Dataset Statistics |[1] Input Dataset Statistics]] | ||

* [[Webarc:Temporal Query Load |[2] Temporal Query Load]] | * [[Webarc:Temporal Query Load |[2] Temporal Query Load]] | ||

* [[Webarc:Tools Developed |[ | * [[Webarc:Time Windows Used in Experiments| [3] Time Windows]] | ||

* [[Webarc:Combining Statistics in Multiple Time Windows| [4] How We Combine Statistics]] | |||

* [[Webarc:Tools Developed |[5] Tools Developed]] | |||

Latest revision as of 20:26, 25 November 2009

Goals

There are two main goals that we want to achieve through the following experiments.

- For time-constrained queries, we examine how temporally-anchored scoring can affect the search results in real-world time series data.

- For time-constrained queries with a time window indexing scheme, we examine how approximating scoring parameters can affect the search results, depending on the time window size.

Input Dataset

We preprocess the entire revision history of the English Wikipedia from 2001 to 2007. After preprocessing, we obtain 84 monthly snapshots starting from January 2001 ending in December 2007. Included in each monthly snapshot are the latest revision of existing articles at the end of the month. For example, the Wikipedia article 'Economy of the United States' created on August 21 2002 is included in the six monthly snapshots of August 2002, September 2002, ..., January 2003 since there is no newer revision made until February 7 2003, whereas the same article revised on August 16 2002 is not included in any of the snapshots.

Statistics of monthly snapshots

Queries

Based on the AOL query log made briefly available in 2005, we build our temporal query load by extracting multi-term query phrases where the user selected an English Wikipedia article among the search results. Note that the different temporal contexts give different relative weights to each of the query terms, yielding different result rankings. In other words, for a query phrase with term t1 and t2, t1 may be given more weight than t2 at a certain query time span qts1, but it may be opposite at a different query time span qts2. This means that two document versions that belong to both qts1 and qts2 can have different rankings depending on the specified query time span. However, in the case of single-term queries, any two document versions will retain their ranking regardless of the specified query time span, as long as both belong to the query time span. In our experiments, we highlight the impact on the search results from different query time spans by focusing only on multi-term queries. The more general case where single-term queries are also included will be able to be inferred from the results (It has been reported that about 80% of queries are multi-termed).

Once we extract qualified query phrases, we further filter out less frequently appearing phrases. In particular, we take the query phrases that appear 10 times or more in the log. As a result, we gather a total of 223 such query phrases.

Using the 223 query phrases, we now construct a temporal query load by combining query time spans with each of the query phrases. Specifically, we use 8 query time span lengths: 1, 2, 4, 8, 16, 32, 64, and 83 months. For each query time span length, we make all possible query time spans, starting from the first month. For example, for the query time span length of two months, we make 82 query time spans: (February 1st 2001 ~ March 31st 2001), (March 1st 2001 ~ April 30th 2001), ..., (November 1st 2007 ~ December 31st 2007). A total of 462 (= 83 + 82 + 80 + 76 + 68 + 52 + 20 + 1) different query time spans are used. In the end, we generate 103,026 (= 223 x 462) queries in each experiment session.

The complete list of query terms and query time spans

Scoring Methods

We use two different scoring methods; 1. a tf-idf based method (OKAPI BM-25, in particular) and 2. a language-model based method (the KL divergence combined with the Dirichlet's smoothing, in particular). For each query, we take 100 top documents from each scoring method.

Experiments

The experiments consist of eight experiment sessions, each with one of the following time window sizes: 1, 2, 4, 8, 16, 32, 64, and 83-month. For each experiment session, the temporal query load described above is fed into our search servers.

Time Windows Used in Experiments

When scoring relevance, approximated query / document statistics are used. For a query time span (tp1 ~ tp2), we find a series of time windows twi ~ twj that completely cover (tp1 ~ tp2). The statistics for the query time span is approximated by combining statistics of twi ~ twj.

How We Combine Statistics in Multiple Time Windows

For example, if tw1 ~ tw3 completely overlaps a given query time span as in the figure below, we combine statistics of tw1, tw2 and tw3 and use them when scoring.

In the case where the time window size is a single month, a series of time windows always perfectly overlap with any query time span, and therefore we use the exact statistics found within the query time span.

Once we run all the eight experiment sessions, we obtain 64 result sets as shown in the table below. In the table, each row represents an experiment session with a given time window size, and each column represents a query time span size. In the entries of the table, the result set qts.i_tw.j refers to the result set for the query time span size i and the time window size j. The size of each result set varies, depending on the query time span size. For example, qts.1_tw.x has search results for 18,509 (= 223 query phrases x 83 query time spans) queries, while qts.83_tw.x has search results for only 223 (= 223 x 1) queries.

| tw\qts | 1 | 2 | 4 | 8 | 16 | 32 | 64 | 83 |

| 1 | qts.1_tw.1 | qts.2_tw.1 | qts.4_tw.1 | qts.8_tw.1 | qts.16_tw.1 | qts.32_tw.1 | qts.64_tw.1 | qts.83_tw.1 |

| 2 | qts.1_tw.2 | qts.2_tw.2 | qts.4_tw.2 | qts.8_tw.2 | qts.16_tw.2 | qts.32_tw.2 | qts.64_tw.2 | qts.83_tw.2 |

| 4 | qts.1_tw.4 | qts.2_tw.4 | qts.4_tw.4 | qts.8_tw.4 | qts.16_tw.4 | qts.32_tw.4 | qts.64_tw.4 | qts.83_tw.4 |

| 8 | qts.1_tw.8 | qts.2_tw.8 | qts.4_tw.8 | qts.8_tw.8 | qts.16_tw.8 | qts.32_tw.8 | qts.64_tw.8 | qts.83_tw.8 |

| 16 | qts.1_tw.16 | qts.2_tw.16 | qts.4_tw.16 | qts.8_tw.16 | qts.16_tw.16 | qts.32_tw.16 | qts.64_tw.16 | qts.83_tw.16 |

| 32 | qts.1_tw.32 | qts.2_tw.32 | qts.4_tw.32 | qts.8_tw.32 | qts.16_tw.32 | qts.32_tw.32 | qts.64_tw.32 | qts.83_tw.32 |

| 64 | qts.1_tw.64 | qts.2_tw.64 | qts.4_tw.64 | qts.8_tw.64 | qts.16_tw.64 | qts.32_tw.64 | qts.64_tw.64 | qts.83_tw.64 |

| 83 | qts.1_tw.83 | qts.2_tw.83 | qts.4_tw.83 | qts.8_tw.83 | qts.16_tw.83 | qts.32_tw.83 | qts.64_tw.83 | qts.83_tw.83 |

We run the eight-session experiments one more time with a different scoring method.

In total, we generate 1,648,416 queries (= 103,026 queries per session x 8 sessions x 2 scoring methods) and obtain about 160 million search results (each query returns up to 100 search results).

Analysis

Impact of Temporally-anchored Scoring

To reiterate, the first goal of the experiments is to examine how temporally-anchored scoring can yield different search results. In the traditional search scheme, a temporal query is carried out by first scoring documents with the document/term statistics in the entire collection, followed by filtering in only those that belong to the specified query time span. Apparently, our experiment session for time window size 83 perfectly emulates the traditional scheme, resulting in the same results sets as the traditional scheme would have. Thus, in the first analysis, we compare the following two cases:

- Scoring is performed based on the statistics of the entire history (i.e. the time window size is 83).

- Scoring is performed based on the actual query time span specified.

In particular, the search results set qts.x_tw.1 is compared against qts.x_tw.83. We compute precisions at r=10, 20 and 100 and Kendall's tau at r=10, 20 and 100.

We also find out one good temporal query example that can emphasize the importance of the temporally-anchored scoring.

Impact of Time-Window Based Approximation

The second goal is to examine how approximating scoring parameters can affect the search results, depending on the time window size. When the time window size is a single month, any query time span will perfectly match one or more of consecutive time windows. Thus, the statistics that we use for any query time span will be exact ones. On the other hand, when the time window size of two or more months, many query time spans will end up covering parts of time windows, and we approximate the statistics for a query time span by using the combined statistics of all overlapping time windows. See [xx] for how we combine statistics of multiple time windows. Therefore, we can examine the impact of time-window based approximation by comparing the search results set qts.x_tw.y (y!=1) with qts.x_tw.1. for all xs and ys. For example, comparing qts.1_tw.2 with qts.1_tw.1 will show us how the search results are different when we use 2-month time windows in the case that the query time span is a single month.

In particular, we compute the following metrics for all <math>r \in \{10, 20, 100\}</math>, <math>x \in \{1, 2, 4, 8, 16, 32, 64, 83\}</math>, and <math>y \in \{2, 4, 8, 16, 32, 64, 83\}</math>, where <math>r</math> is the number of search results, <math>x</math> is the query time span length, and <math>y</math> is the time window length.

- Mean Recall: <math>mP_r(x, y)</math>

- Mean Kendall's <math>\tau</math>: <math>mKT_r(x, y)</math>

We plot mean recalls and kendall's <math>\tau</math>'s in graphs. Each of the graphs in the case where r=10 and Okapi BM25 is used as the scoring method is shown below.